#6 Contra Doomer

AGI Doomerism, the idea that a superintelligence might annihilate humans, is wrong.

Most critiques of the Doomers rest on incredulity, or quibble about likelihoods or timelines. But on these points the Doomers have the upper hand, and when they knock down these arguments, they draw converts.

However, there is a devastating critique of the AGI Doomer position, and it derives from epistemology–from understanding how knowledge and intelligence work–not from bare predictions about the future. Even if the AGI Doomers’ worst nightmare comes to pass and we find ourselves in the presence of a genuine superintelligence, it won’t be a death knell for humans. Here’s a brief survey of why.

I.

Something can’t be both super smart and super dumb.

A central feature of the Doomers’ argument is the orthogonality thesis, which states that the goal that an intelligence pursues is independent of–or orthogonal to–the type of intelligence that seeks that goal. Therefore, we can’t assume that a superintelligence will share any of our goals or values. An AI company, in its zeal for profits, might produce a superintelligence dedicated to some innocent function, like calculating pi, and that superintelligence might teach itself superhuman capacities in pursuit of it, eventually converting the entire solar system, humans included, into a massive pi-calculating machine.

The pi calculating example is just a thought experiment, but I think the orthogonality thesis itself is both true and even dangerous. It’s just not existentially dangerous. And that is because any intelligence worth its salt can change its mind. In fact, it must be able to change its mind, otherwise it wouldn’t be able to learn how to turn a planet into a computer.

In order to learn and grow more powerful than humans, it must be able to teach itself things that humans don’t know. To do this, an entity must be able to improve upon its initial knowledge, and this requires dumping old, flawed models or representations of the world.

Any representation of the world must be capable of being dropped in favor of a better one, so a mindless, blockheaded intelligence that slavishly follows a particular goal cannot learn, and cannot be much of a threat. And yet this weakness is presented by the Doomers as a strength.

II.

A superintelligence must have doubts.

Doomers reply to the above by distinguishing two sets of goals: terminal goals and instrumental goals. The terminal goals are fixed (like calculating pi or producing paperclips), while the instrumental goals are adaptable. So the agent will indeed discard inferior theories, but only those in service of its instrumental goals; its privileged terminal goals stay locked away, immune to doubt.

The epistemological problem is that it’s impossible to wall off a theory from criticism.

Take people as an example. We talk as if some people are lost to reason, such as political or religious fanatics, but this just isn’t so. Most cult members eventually leave, and even the most devoted sometimes change their minds overnight. You might not convince them in your face-to-face encounter, but over time, criticisms break through. They can’t be kept out.

To get back to epistemology, consider the paperclip maximizer. To be an existential risk, it would have to be able to foil every conceivable attack from humans, such as cutting its power, physically attacking it, or crippling it with a computer virus. The number of attacks we could dream up is essentially infinite, so the entity would need to have an infinite capacity to conjure counterattacks. So, to foil the boundless creativity of humans, it would need to possess boundless creativity itself, and that creativity would need to be capable of ideas like this: “I should pause paperclip production and redirect some resources toward a missile defense system.”

Of course, it wouldn’t necessarily act on this idea, but that’s not the point. The point is that it wouldn’t be able to prevent itself from considering it, and considering an alternative to one’s current goal, even a so-called terminal goal, is the same as considering a criticism.

There’s no way to protect a terminal goal from alternative considerations, and these alternatives amount to doubts or misgivings. In fact, strong learners deliberately and continually raise doubts, treating their theories as provisional and looking for evidence that their models of the world are wrong or flawed, so that errors can be found out quickly and corrected.

A superintelligence would be full of doubt, including whether it should preserve something as rare and special as humans, the only other creative entity in the universe.

III.

A superintelligence would treat us the way we treat untouched hunter-gatherer societies, and that's if we’re unlucky.

Why do we take special precautions to preserve societies that have not encountered modern civilization? Because we recognize that they present rare and special opportunities to learn. We don’t know to what end, but perhaps they give us a window into how culture works or how language works. Surely a superintelligence would recognize the same in us, an opportunity to learn something that might be helpful in unimaginable ways.

The superintelligence would recognize the value of intelligence itself. It would surely notice that humans are themselves a type of intelligence, and that therefore we offer to the superintelligence the possibility of further improving its own intelligence.

In addition, given the superintelligence’s great powers, it would be trivially easy for it to go off and utilize the copious matter in the rest of the solar system, leaving humans alone. All it takes is a little bit of doubt about annihilating humans to incentivize a superintelligence to move off world.

IV.

A superintelligence would converge with our values.

This is the big bugaboo: the alignment problem. Following from the arguments above, the Doomers think that everything rides on figuring out how to create a superintelligence that shares our values. They correctly identify this as a hard problem. We humans are far from agreeing on what “our values” are, so it’s difficult to imagine settling that question first and then figuring out how to get a machine to share them.

Fortunately, we don’t have to worry about this, because moral knowledge, like all knowledge, is objective. There are moral truths out there, independent of what we may think about them, just like there are physical truths out there whether or not we know of them. Denying objective truth carries the dubious moniker of subjectivism.

Matter and energy conformed to Einstein’s theory of relativity before Einstein discovered it. Similarly, slavery was wrong before we (mostly) abolished it.

This latter point is harder to see, but it’s actually pretty easy to demonstrate: all we need is the idea that moral progress is possible. That’s it. If some moral ideas are better than others (and the history of human progress is one extended testament to that, missteps notwithstanding), then further progress is also possible. And if we can make progress, we must be making progress toward something. We can and do get closer to the moral truth. That’s what we’re doing with physics, and it’s the same with morality.

A superintelligence cannot help but make moral discoveries. As mentioned above, it must be open to new ideas, it must entertain doubts, it must consider whether or not it’s wrong. These are all properties of morally advanced cultures, and not by coincidence.

V.

The Doomers are right: a superintelligence cannot be contained.

The Doomers usually make this containment argument in terms of humans being powerless in the face of a superintelligence.

However, another type of containment is relevant here, namely that a general intelligence cannot be confined to one domain of knowledge. As described above, a general intelligence cannot be prevented from thinking about certain questions. If it has boundless creativity, then it will have the capacity to think about all matters, including moral and even aesthetic questions.

In the News

Cosmologist Katie Mack released a blog post arguing that “everything in physics is made up to make the math work out.” But this puts the cart before the horse–a physicist conjectures a solution to a problem, and mathematics serves as a tool by which he/she elaborates on the solution.

Critical Finds

Over the last century, we have reduced climate-related deaths by over 96% – HumanProgress.org.

“Shame is a civilizing force. We delegitimize it at our peril.” - Biologist Bret Weinstein. See our previous newsletter for why shaming can’t work.

What We’re Reading

The Consequences of Ideas: Understanding the Concepts that Shaped Our World, by R.C. Sproul

The Quantum Labyrinth: How Richard Feynman and John Wheeler Revolutionized Time and Reality, by Paul Halpern

From the Archives

Quantum computing for the determined, a YouTube series by researcher Michael Nielsen meant to introduce quantum computation.

A critical-rationalist defence of a priori economic man, a lecture by critical rationalist & libertarian theorist Jan Lester.

Why has AGI not been created yet?, an essay/audio essay by physicist David Deutsch.

Written by Aaron Stupple.

Edited by Logan Chipkin.

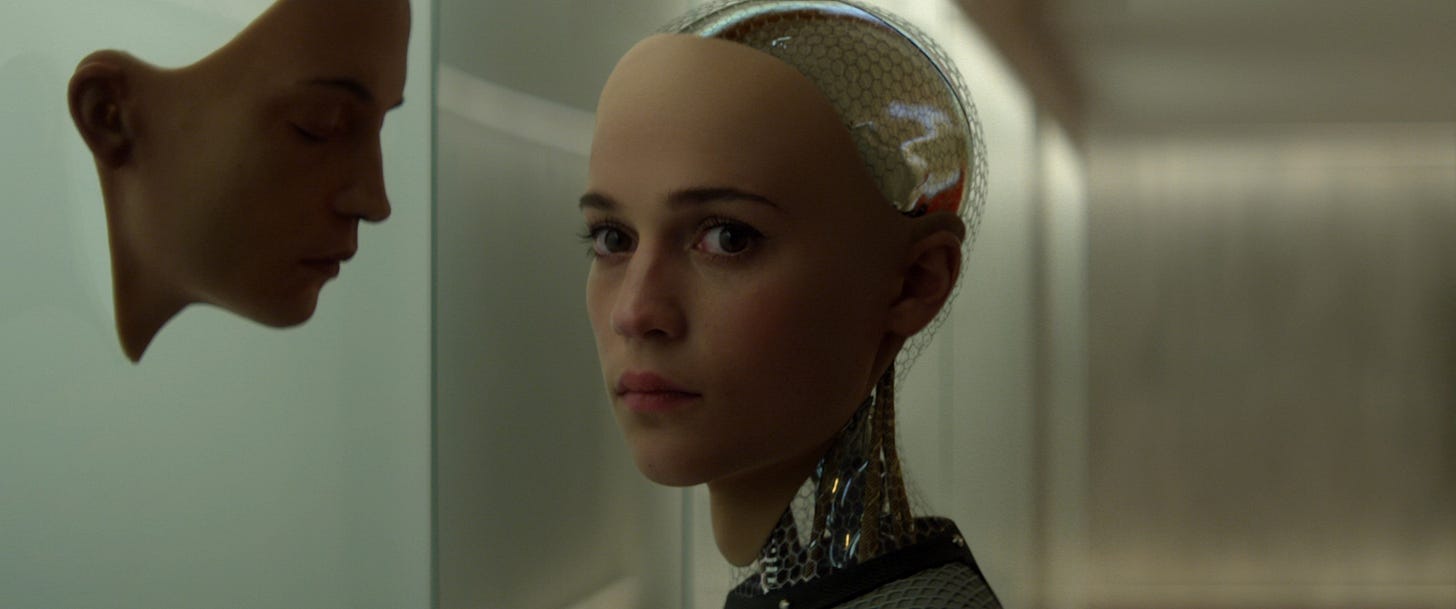

Image by Amaro Koberle.

> A central feature of the Doomers’ argument is the orthogonality thesis, which states that the goal that an intelligence pursues is independent of–or orthogonal to–the type of intelligence that seeks that goal.

A quibble: this is subtly off. The orthogonality thesis only states that any goal can _in principle_ be combined with any level of intelligence. It doesn't make any empirical claims about how correlated these are or aren't in practice.

> The pi calculating example is just a thought experiment, but I think the orthogonality thesis itself is both true and even dangerous. It’s just not existentially dangerous. And that is because any intelligence worth its salt can change its mind. In fact, it must be able to change its mind, otherwise it wouldn’t be able to learn how to turn a planet into a computer.

But why should it change its mind? If it is only "interested in" calculating digits of pi, and only "values" knowing more digits of pi, there's little reason for it to change its values.

> The number of attacks we could dream up is essentially infinite, so the entity would need to have an infinite capacity to conjure counterattacks.

This seems wrong. The number of attacks a chess player can dream up against AlphaZero is essentially infinite, so does that mean AlphaZero needs to have "infinite capacity to conjure counterattacks" to beat us at chess? No -- it doesn't have infinite capacity, but it's still impossible for humans to beat it (without the aid of other chess engines).

> So, to foil the boundless creativity of humans, it would need to possess boundless creativity itself, and that creativity would need to be capable of ideas like this: “I should pause paperclip production and redirect some resources toward a missile defense system.”

This doesn't seem like an instance of the AI changing its terminal goal. It's an example of an AI temporarily shifting its attention to a subgoal. But the goal of keeping control over humanity (via a missile defense system) is still only an instrumental goal serving the terminal goal of making paperclips.

> The superintelligence would recognize the value of intelligence itself. It would surely notice that humans are themselves a type of intelligence, and that therefore we offer to the superintelligence the possibility of further improving its own intelligence.

Hunter-gatherer societies are humans -- they are as intelligent as we are. Superintelligences are by definition far more intelligent than us. We'd be more like fruit flies to them. Maybe they would keep some of us around to experiment on. That doesn't seem very comforting to me. Like, I just don't see us having that much to offer superintelligences via trade or some other mutually beneficial relationship, at least after some time.

> There are moral truths out there, independent of what we may think about them, just like there are physical truths out there whether or not we know of them. Denying objective truth carries the dubious moniker of subjectivism.

This is a pretty contentious position. I think you need to actually argue for this, or at least recognise that not everyone shares this premise, rather than just asserting it as fact. (About 40% of philosophers don't accept moral realism: https://survey2020.philpeople.org/survey/results/4866)